Deploying a Runpod Runner

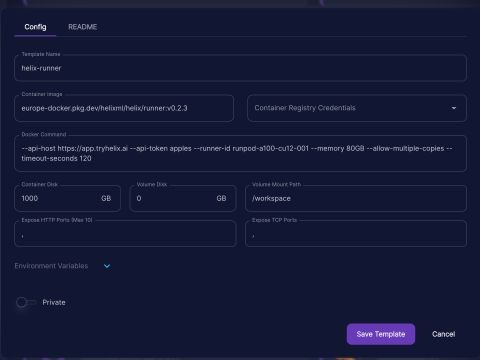

Create a Runpod GPU pod template like the one in the screenshot above.

Don’t use the version or arguments shown in the screenshot; use the details below.

Container image:

registry.helixml.tech/helix/runner:<LATEST_TAG>

Where <LATEST_TAG> is the tag of the latest release, in the form X.Y.Z, from https://get.helixml.tech/latest.txt

You can also use X.Y.Z-small for an image with Llama3-8B and Phi3-Mini pre-baked (llama3:instruct,phi3:instruct), or X.Y.Z-large for one with all our supported Ollama models pre-baked. Warning: the large image is over 100GB, but it saves you re-downloading the weights every time the container restarts!

To get started, we recommend using X.Y.Z-small and setting the RUNTIME_OLLAMA_WARMUP_MODELS environment variable to llama3:instruct,phi3:instruct (in the Runpod UI), so the download isn’t too big. If you want to use other models in the interface, don’t specify the RUNTIME_OLLAMA_WARMUP_MODELS environment variable, and the runner will use the defaults (all models).
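The image naming scheme above can be sketched in a few lines of Python. This is a minimal sketch, not part of Helix: the function names are hypothetical, the tag 1.2.3 in the usage note is a made-up example, and it assumes that latest.txt contains only the bare X.Y.Z tag (possibly followed by whitespace).

```python
import re
from urllib.request import urlopen

# Values taken from the text above.
REGISTRY_IMAGE = "registry.helixml.tech/helix/runner"
LATEST_URL = "https://get.helixml.tech/latest.txt"
VARIANTS = ("", "small", "large")  # plain image, -small, -large


def fetch_latest_tag(url: str = LATEST_URL, timeout: float = 10.0) -> str:
    """Download the latest release tag.

    Assumption: the file body is just the tag, e.g. "X.Y.Z\n".
    """
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8").strip()


def runner_image(tag: str, variant: str = "") -> str:
    """Build the container image reference for a tag and optional variant."""
    tag = tag.strip()
    if not re.fullmatch(r"\d+\.\d+\.\d+", tag):
        raise ValueError(f"expected a tag of the form X.Y.Z, got {tag!r}")
    if variant not in VARIANTS:
        raise ValueError(f"variant must be one of {VARIANTS}, got {variant!r}")
    suffix = f"-{variant}" if variant else ""
    return f"{REGISTRY_IMAGE}:{tag}{suffix}"
```

For example, `runner_image("1.2.3", "small")` yields the image name to paste into the Container image field, and `runner_image(fetch_latest_tag(), "small")` does the same for whatever release is currently published.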

Docker Command:

--api-host https://<YOUR_CONTROLPLANE_HOSTNAME> --api-token <RUNNER_TOKEN_FROM_ENV> --runner-id runpod-001 --memory <GPU_MEMORY>GB --allow-multiple-copies

Replace <RUNNER_TOKEN_FROM_ENV> and <GPU_MEMORY> (e.g. 24, giving --memory 24GB) accordingly. You might want to change the runner-id to a more descriptive name; make sure it’s unique. That ID will show up in the Helix dashboard at https://<YOUR_CONTROLPLANE_HOSTNAME>/dashboard for admin users.
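If you start many pods, it can help to generate the Docker Command string rather than edit it by hand. The following is a minimal sketch under stated assumptions: the function name, the example hostname helix.example.com, the token and the runner ID are all hypothetical, and only the flags shown in the command above are used.

```python
import shlex


def runner_docker_command(api_host: str, runner_token: str,
                          runner_id: str, gpu_memory_gb: int) -> str:
    """Assemble the runner arguments for the Runpod "Docker Command" field."""
    if not api_host.startswith(("http://", "https://")):
        raise ValueError("api_host must include the scheme, e.g. https://...")
    if gpu_memory_gb <= 0:
        raise ValueError("gpu_memory_gb must be a positive number of GB")
    args = [
        "--api-host", api_host,
        "--api-token", runner_token,
        "--runner-id", runner_id,             # should be unique per runner
        "--memory", f"{gpu_memory_gb}GB",     # e.g. 24 -> "24GB"
        "--allow-multiple-copies",
    ]
    # shlex.join quotes any argument that contains shell-special characters.
    return shlex.join(args)


if __name__ == "__main__":
    # Hypothetical values for illustration only.
    print(runner_docker_command("https://helix.example.com", "secret-token",
                                "runpod-a100-01", 24))
```

Generating one command per pod with a distinct `runner_id` is an easy way to keep the IDs unique in the dashboard.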

Set Container Disk to 500.

Then start pods from your template, customizing the docker command accordingly.

Configuring a Runner

- You can update RUNTIME_OLLAMA_WARMUP_MODELS to match the specific Ollama models you want to enable for your Helix install, see available values.
- Helix will download the weights for models specified in RUNTIME_OLLAMA_WARMUP_MODELS at startup if they are not baked into the image. This can be slow, especially if it runs in parallel across many runners, and can easily saturate your network connection. This is why using the images with pre-baked weights (-small and -large variants) is recommended.
- Warning: the -large image is large (over 100GB), but it saves you re-downloading the weights every time the container restarts! We recommend using X.Y.Z-small and setting the RUNTIME_OLLAMA_WARMUP_MODELS value to llama3:instruct,phi3:instruct to get started, so the download isn’t too big. If you want to use other models in the Helix UI and API, delete the -e RUNTIME_OLLAMA_WARMUP_MODELS line from your docker command, and the runner will use the defaults (all models). The default models will take a long time to download!
- Update <GPU_MEMORY> to correspond to how much GPU memory you have, e.g. “80GB” or “24GB”.
- You can add --gpus 1 before the image name to target a specific GPU on the system (starting at 0). If you want to use multiple GPUs on a node, you’ll need to run multiple runner containers (in that case, remember to give them different names).
- Make sure to run the container with --restart always or the equivalent in your container runtime, since the runner will exit if it detects an unrecoverable error and should be restarted automatically.
- If you want to run the runner on the same machine as the controlplane, either: (a) set --network host and set --api-host http://localhost:8080 so that the runner can connect on localhost via the exposed port, or (b) use --api-host http://172.17.0.1:8080 so that the runner can connect to the API server via the docker bridge IP. On Windows or Mac, you can use --api-host http://host.docker.internal:8080.
- Helix will currently also download and run SDXL and Mistral-7B weights used for fine-tuning at startup. These weights are not currently pre-baked anywhere. This can be disabled with RUNTIME_AXOLOTL_ENABLED=false if desired. If running in a low-memory environment, this may cause CUDA OOM errors at startup, which can be ignored (at startup) since the scheduler will only fit models into available memory after the startup phase.
- If you want to use text fine-tuning, you need to set the environment variable HF_TOKEN to a valid Hugging Face token. To get one, you now need to accept sharing your contact information with Mistral here, then fetch an access token from here and specify it in this environment variable.
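The options in the list above can be combined into a single docker run invocation. The sketch below only assembles the argument list; it is an illustration under stated assumptions, not the official command. The function names and the mode labels ("host-network", "bridge", "docker-desktop") are hypothetical, the gpus value is passed through exactly as you supply it, and anything the list does not mention (container name, detached mode, and so on) is left out.

```python
from typing import List, Optional

# --api-host values from the list above, keyed by hypothetical mode labels.
LOCAL_API_HOSTS = {
    "host-network": "http://localhost:8080",              # with --network host
    "bridge": "http://172.17.0.1:8080",                   # docker bridge IP
    "docker-desktop": "http://host.docker.internal:8080", # Windows or Mac
}


def local_api_host(mode: str) -> str:
    """Return the --api-host value for a runner on the controlplane machine."""
    return LOCAL_API_HOSTS[mode]


def docker_run_argv(image: str, runner_args: List[str],
                    warmup_models: Optional[List[str]] = None,
                    axolotl_enabled: bool = True,
                    hf_token: Optional[str] = None,
                    host_network: bool = False,
                    gpus: Optional[str] = None) -> List[str]:
    """Assemble a docker run argv; options go before the image, runner args after."""
    argv = ["docker", "run", "--restart", "always"]
    if host_network:
        argv += ["--network", "host"]
    if gpus is not None:
        argv += ["--gpus", gpus]
    if warmup_models:  # omit to fall back to the defaults (all models)
        argv += ["-e", "RUNTIME_OLLAMA_WARMUP_MODELS=" + ",".join(warmup_models)]
    if not axolotl_enabled:
        argv += ["-e", "RUNTIME_AXOLOTL_ENABLED=false"]
    if hf_token:
        argv += ["-e", f"HF_TOKEN={hf_token}"]
    argv.append(image)
    argv += runner_args
    return argv
```

Passing the resulting list to `subprocess.run(argv, check=True)` would start the container; returning a list rather than a string avoids shell-quoting problems with tokens and model names.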